Transforming Media

Currently, I am deeply engaged in media transformations, both in my dissertation and in a project with Michael Johansson (Wandering Landscape Machine). My interest lies not in the isomorphic transfer of one media technology to another (an impossible feat), but in the creative and aesthetic potential of the disruptions, signal losses, and noise that occur during these transformations. Inspired by E.T.A. Hoffmann, who elevated this phenomenon to a poetic principle in the early 1800s, this project explores the transformation of images into music.

The tool we are developing will eventually be integrated into the artistic research template of the Wandering Landscape Machine, enabling the machine not only to produce (shadow) images but also to generate sounds from them. Unlike my Camera to MIDI project, this tool works with static images and aims to perform more efficiently while employing different probing approaches.

Technical Approach

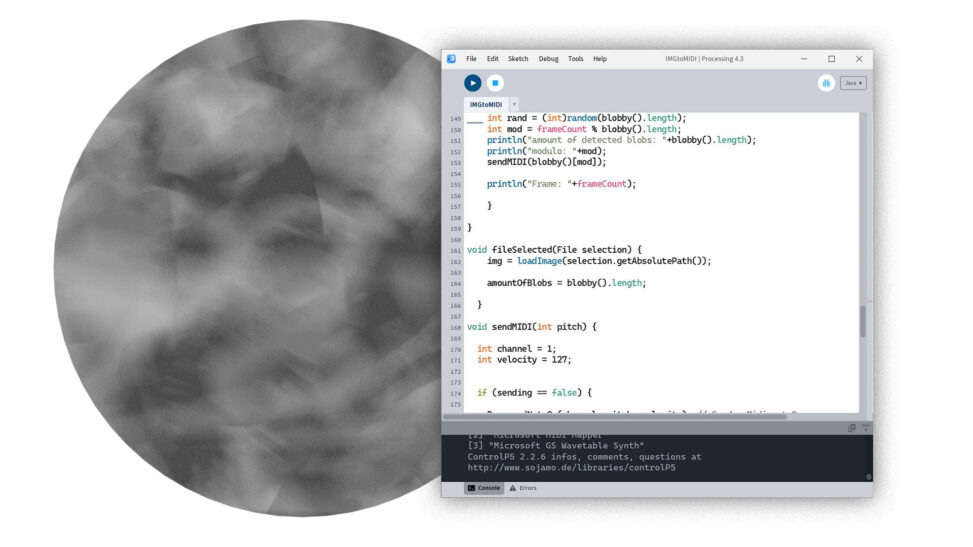

A static image is “probed” at specific critical points to generate MIDI signals (and thus sounds) from these spots. Two primary methods are employed:

- Blob Detection: We locate points of high local contrast within the image. The x and y coordinates of these points (“blobs”) are then converted into corresponding MIDI signals. For blob detection, I use the BlobDetection library by v3ga.

- Phonograph: Given the specific visual style of the Wandering Landscape Machine (square images with circular content), the color values are read along a spiral path (similar to the needle of a phonograph or record player). These “probed” color values are then converted into MIDI control signals. The steepness of the spiral curve is adjustable.
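One way to sketch the phonograph probing is an Archimedean spiral, in which the radius grows linearly with the angle (r = b·θ) and the factor b stands in for the adjustable steepness. This is a minimal sketch under that assumption; the actual tool may use a different spiral, and `spiralPoints`, `growth` and `angleStep` are hypothetical names.

```javascript
// Hypothetical sketch: probe coordinates along an Archimedean spiral r = growth * theta,
// centred on (cx, cy). `growth` plays the role of the adjustable steepness.
function spiralPoints(cx, cy, growth, count, angleStep) {
  const points = [];
  for (let i = 0; i < count; i++) {
    const theta = i * angleStep;   // angle in radians
    const r = growth * theta;      // radius grows linearly with the angle
    points.push({
      x: Math.round(cx + r * Math.cos(theta)),  // rounded to whole pixels
      y: Math.round(cy + r * Math.sin(theta)),
    });
  }
  return points;
}

// Usage: 500 probe points starting at the centre of a 512 x 512 image.
const probes = spiralPoints(256, 256, 2, 500, 0.1);
```

Because the angle step is constant, neighbouring probe points drift further apart toward the rim; stepping by arc length instead of by angle would sample the image more evenly.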

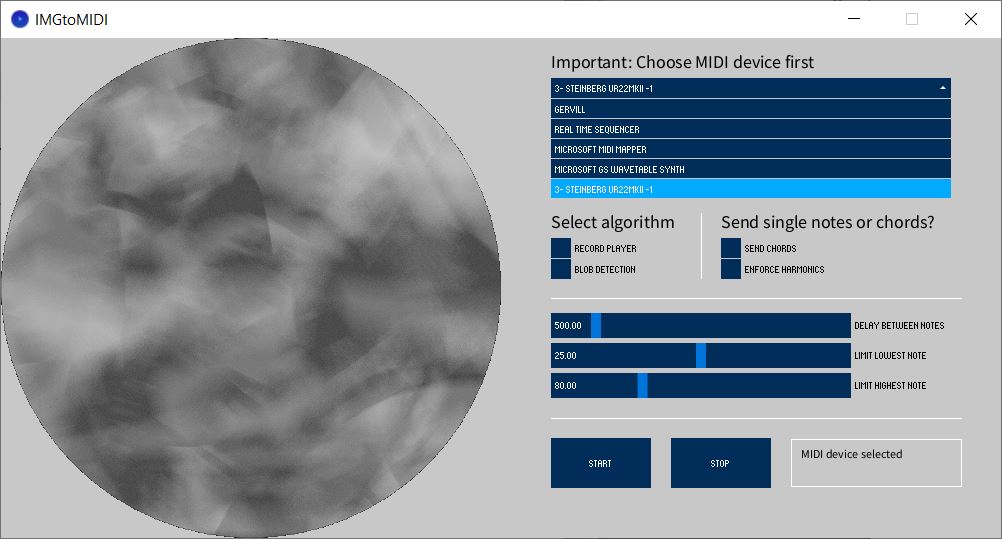

Example of a shadow image produced by the Wandering Landscape Machine. Since all images share this overall shape, it is crucial to limit the phonograph function to the content of the circle.
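Limiting the phonograph to the circle amounts to a point-in-circle test before each pixel is read. The sketch below assumes the image is available as an RGBA byte array in row-major order (as returned by the Canvas API's `getImageData`) and uses Rec. 601 luma as the probed "color value"; both choices, and all function names, are illustrative assumptions.

```javascript
// Hypothetical sketch: restrict probing to the circular content of a square image
// and map the brightness under each probe point to a MIDI CC value (0–127).
// `pixels` is assumed to be RGBA bytes in row-major order, as in ImageData.data.
function insideCircle(x, y, cx, cy, radius) {
  const dx = x - cx;
  const dy = y - cy;
  return dx * dx + dy * dy <= radius * radius;
}

function probeToCC(pixels, width, x, y) {
  const i = (y * width + x) * 4;  // offset of the red byte
  const luma = 0.299 * pixels[i] + 0.587 * pixels[i + 1] + 0.114 * pixels[i + 2];
  return Math.round((luma / 255) * 127);  // brightness 0–255 -> CC value 0–127
}

function probeImage(pixels, width, height, points) {
  const cx = width / 2;
  const cy = height / 2;
  const radius = Math.min(width, height) / 2;
  return points
    .filter(p => p.x >= 0 && p.x < width && p.y >= 0 && p.y < height)  // stay on the image
    .filter(p => insideCircle(p.x, p.y, cx, cy, radius))               // stay inside the circle
    .map(p => probeToCC(pixels, width, p.x, p.y));
}
```

The probe points must be integer pixel coordinates; the spiral generator therefore has to round its output before the lookup.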

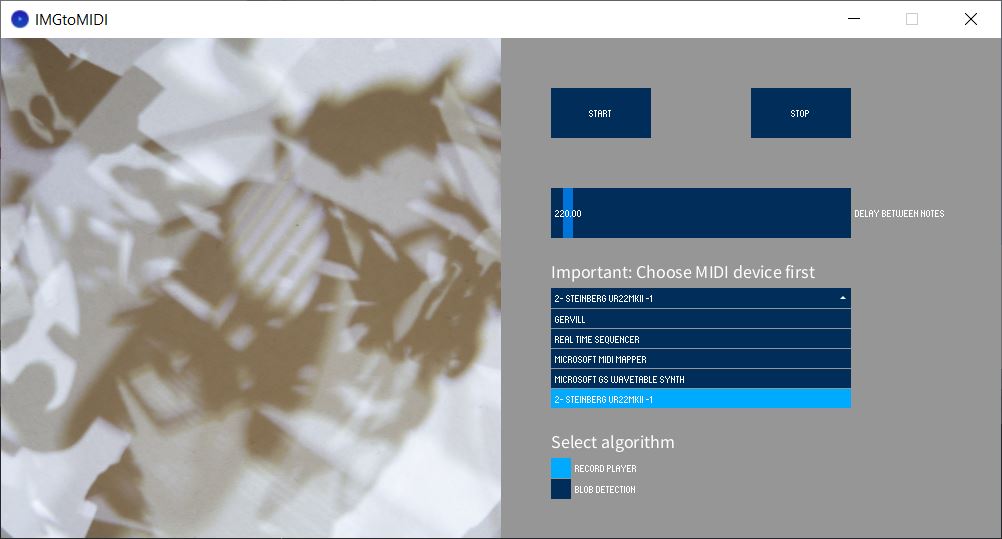

Since the tool will feature a small GUI and needs to run as a standalone *.exe, I chose Processing over Python. Processing's controlP5 (cp5) library allows for the quick and efficient creation of a basic prototype.

Transforming Image Data to MIDI Signals

Transforming image data into MIDI signals is merely the first step. “Real” music is only produced when these MIDI signals are converted into actual sounds using a synthesizer. As we plan to use an external hardware synthesizer, the tool will detect all available MIDI devices on the system and prompt the user to select the desired MIDI interface.
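In a browser, the same device selection can be done with the Web MIDI API: `navigator.requestMIDIAccess()` returns a promise for a `MIDIAccess` object whose `outputs` map holds one `MIDIOutput` per available port. The helper below is a hypothetical sketch (`listMidiOutputs` is an illustrative name) that turns this map into a list for a selection menu.

```javascript
// Hypothetical helper: collect the available MIDI outputs from a MIDIAccess object
// (as returned by navigator.requestMIDIAccess()) so the GUI can offer a choice.
function listMidiOutputs(midiAccess) {
  const outputs = [];
  for (const output of midiAccess.outputs.values()) {
    outputs.push({ id: output.id, name: output.name, port: output });
  }
  return outputs;
}

// In the browser (the user has to grant MIDI access first):
// navigator.requestMIDIAccess().then(access => {
//   const choices = listMidiOutputs(access);   // e.g. fill a <select> with choices
//   const selectedMidiOutput = choices[0]?.port;
// });
```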

Observations and Learnings

MIDI Panic Message: Sending a MIDI Panic Message (MIDI CC 120 or CC 123) via the Processing library The MidiBus, in order to immediately silence all notes on all channels, isn't straightforward. I created a workaround that loops a NoteOff message over all 128 possible MIDI pitches (0–127). Suggestions for a better solution are very welcome.

```java
// hacky "MIDI panic": send a NoteOff to every pitch (0–127)
for (int j = 0; j < 128; j++) {
  myBus.sendNoteOff(1, j, 127); // send a MIDI NoteOff
  println("sending MIDI panicOff to: " + j);
}
```

👉 Update to this problem: I found a solution to send MIDI control changes (and therefore also MIDI Panic messages) via The MidiBus. The trick is to set the last int (the controller value) to 0:

```java
// send a CC 120 ("All Sound Off")
myBus.sendControllerChange(1, 120, 0);

// send a CC 123 ("All Notes Off")
myBus.sendControllerChange(1, 123, 0);
```

Although CC 120 seems to be the ultima ratio that should mute all sounds (regardless of release time and sustain), I observed in around 5% of all test cases that some sounds kept playing on the synthesizer even after sending CC 120. To ensure that all sounds are reliably cut off when STOP is triggered, I use a combination of my “hacky” for-loop, which sends NoteOffs to all possible MIDI pitch values, and a CC 120 sent in parallel.¹

Compatibility Issues: The MidiBus has compatibility issues with the Arturia MicroFreak when it is connected via USB as a MIDI interface. While MidiBus.list() recognizes the device, the MicroFreak seemingly ignores all MIDI commands sent to it. That's why I am currently using the Steinberg UR22 mkII audio/MIDI interface to supply MIDI signals to the MicroFreak via traditional DIN cables.

Windows vs. Mac OS X: The MidiBus doesn't recognize my Swissonic USB-MIDI 1×1 interface on Windows, but it works fine on Mac OS X. I suspect this is an issue with the device itself, since there are reports online that it is quite picky about the mainboard's chipset. (There may also be a driver issue: some people suggest that the software for the Roland UM-1G can also be used with the Swissonic USB-MIDI 1×1. I need to check this out in the future.)

Threading and Synchronization: Implementing threading in Processing seems fairly straightforward via thread("[name of function]"), but it quickly desynchronizes the timing between functions, which is devastating in a time-critical application such as music generation. That's why the script currently runs on a single thread.

NoteOn and NoteOff Commands: To make the synthesizer produce meaningful sound, a delay is necessary between each NoteOn and its NoteOff command; otherwise, each note is muted immediately after being triggered. Although most code examples for The MidiBus use Processing's delay() function, this approach is ill-suited here because it halts the entire sketch, including the user interface. To avoid this, I developed a function that uses millis() to implement the delay between NoteOn and NoteOff, adjustable via a slider.
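The millis()-based idea can be sketched as a small scheduler: every NoteOn records the time at which its NoteOff is due, and a function called regularly (Processing's draw() loop, or requestAnimationFrame in a browser) sends the NoteOffs whose time has come, without ever pausing the program. This is a hypothetical JavaScript sketch; `NoteScheduler` and its methods are illustrative names, and channels are numbered 0–15 as in the raw MIDI status byte.

```javascript
// Hypothetical non-blocking NoteOn/NoteOff scheduler. `send` is any function that
// accepts a MIDI message array, e.g. msg => selectedMidiOutput.send(msg) in a browser.
class NoteScheduler {
  constructor(send) {
    this.send = send;
    this.pending = [];  // notes still sounding: { channel, pitch, offAt }
  }

  // Start a note now and remember when it has to end (times in milliseconds).
  play(channel, pitch, velocity, now, durationMs) {
    this.send([0x90 + channel, pitch, velocity]);  // NoteOn
    this.pending.push({ channel, pitch, offAt: now + durationMs });
  }

  // Call regularly with the current time; sends every NoteOff that is due.
  update(now) {
    const due = this.pending.filter(n => n.offAt <= now);
    this.pending = this.pending.filter(n => n.offAt > now);
    for (const n of due) {
      this.send([0x80 + n.channel, n.pitch, 0]);  // NoteOff
    }
  }
}
```

In the browser, `MIDIOutput.send()` also accepts an optional timestamp, so a NoteOff could instead be queued up front, e.g. `output.send([0x80, 60, 0], performance.now() + 250)`; an explicit scheduler keeps the list of sounding notes available to the GUI.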

Conclusion

The tool is open source and still very much (⚠) a work in progress. The code is available on GitHub.

JavaScript Rewrite

The longer I work on this task in Processing, the clearer it becomes that Processing is no longer the tool of choice. In particular, the GUI library controlP5 has not seen significant development since 2015, making it difficult to determine whether certain bugs are caused by errors in my own code or by incompatibilities between the current version of Processing and its libraries.

For this reason, I have decided to rewrite the project from scratch in JavaScript and convert it into a web tool using some HTML and CSS. This approach offers the following advantages:

- The tool itself is extremely small (less than 40 kB) and requires no external libraries (in other words, it consists solely of vanilla JS, HTML, and CSS, all packaged into a single file).

- Using HTML and CSS allows for a far more elaborate GUI design. Additionally, the native resolution of the output device can be utilized without embedding pixelated fonts, as is often necessary in Processing.

- The tool is easy to share: all that is required is to access a webpage.

- The tool is compatible with any system that can run a modern browser (currently tested on Windows and Mac OS X).

- The performance is significantly improved (though this is partly due to the fact that the Processing version was implemented with some rather questionable solutions).

- Probing can finally be visualized, and it’s now possible to display in real time which probing coordinate corresponds to the currently played MIDI signal.

- A significantly greater number of parameters can be adjusted via the GUI (e.g., the number of probing points, the spiral’s growth factor, or its origin point).

- The chord mode now offers significantly more features, including support for all major chords and an arpeggiator.
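The chord mode rests on simple interval arithmetic: a major triad consists of the root, the major third (4 semitones up) and the perfect fifth (7 semitones up), and an arpeggiator plays these pitches one after another instead of simultaneously. A minimal sketch with hypothetical function names, assuming a plain upward pattern (the tool's arpeggiator may offer other patterns):

```javascript
// Hypothetical sketch: major triad = root, major third (+4), perfect fifth (+7).
// Pitches outside the MIDI range 0–127 are dropped.
function majorChord(root) {
  return [root, root + 4, root + 7].filter(n => n >= 0 && n <= 127);
}

// Upward arpeggio: the pitch to play at a given step, cycling through the chord.
function arpeggioStep(chord, step) {
  return chord[step % chord.length];
}

const cMajor = majorChord(60);  // MIDI 60 (middle C), 64, 67
const pattern = [0, 1, 2, 3].map(step => arpeggioStep(cMajor, step));
```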

Limitations:

- As before, the Arturia MicroFreak is not recognized when connected via USB. However, the Steinberg UR22 mkII is detected without any issues.

- I have temporarily decided to forgo blob detection to avoid overloading the tool. Currently, probing is done using the “phonograph method”.

- The tool works without any issues in Firefox, but the system dialog that prompts the user to allow MIDI access is poorly worded.

- Again, the “stop all notes” function (“MIDI panic”) needs some tweaks to work reliably. The current version sends “All Notes Off” on all 16 channels:

```javascript
// Send "All Notes Off" control change message for each channel (0xB0 is Control Change on channel 1)
for (let i = 0; i < 16; i++) { // send to all 16 channels
  selectedMidiOutput.send([0xB0 + i, 123, 0]); // 123 is the "All Notes Off" controller
}
```
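One possible tweak would be to port the combination that proved reliable in the Processing version: CC 120 and CC 123 plus an explicit NoteOff for every pitch, on every channel. A hypothetical sketch that builds the message list (`buildPanicMessages` is an illustrative name; `selectedMidiOutput` is the output used in the snippet above):

```javascript
// Hypothetical, more thorough panic: on each of the 16 channels send CC 120
// (All Sound Off), CC 123 (All Notes Off) and a NoteOff for each of the 128 pitches.
function buildPanicMessages() {
  const messages = [];
  for (let channel = 0; channel < 16; channel++) {
    messages.push([0xB0 + channel, 120, 0]);      // All Sound Off
    messages.push([0xB0 + channel, 123, 0]);      // All Notes Off
    for (let pitch = 0; pitch < 128; pitch++) {
      messages.push([0x80 + channel, pitch, 0]);  // NoteOff
    }
  }
  return messages;
}

// In the browser:
// for (const msg of buildPanicMessages()) selectedMidiOutput.send(msg);
```

This amounts to 2,080 three-byte messages. Over a 5-pin DIN connection running at 31,250 baud (about 3,125 bytes per second), that takes roughly two seconds to transmit, so limiting the loop to the channels actually in use may be worthwhile.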
